Here's a follow-up post to my first "sad legacy of Everyday Math" post, in which I concluded by saying that

You can’t blame the mathematical deficiencies of these 4th and 5th graders on their parents: both the private school and the after school program select for parents who care about education. You can’t blame it on the kids: my kids, who clearly wanted to learn, had been admitted [to our after school program] in part based on their behavior.

Picking up from there, the second post proceeded as follows:

You also can't blame it on language problems; these kids are fluent in English. In fact, there's really only one thing outside the Everyday Math curriculum that one can possibly point a finger at, and that is that these immigrant parents (many of whom don't speak English) don't realize what many native-born parents already know: namely, that they can't count on the schools to fully educate their children.

So these kids are a case study in what happens when you leave math instruction entirely up to Everyday Math practitioners. And the answers to this question are slowly coming in.

For several of the 5th graders I work with, it turns out that not only do they not know how to borrow across multiple digits; they also don't know their basic addition and subtraction "facts." In other words, they don't automatically know that, say, 5 plus 7 is 12, or that 15 - 8 is 7; instead they count on their fingers.

This got me thinking about addition and subtraction "facts." Back in my day, there was no issue of kids learning these facts as such. Yes, we memorized our multiplication tables. But we never set about deliberately memorizing that 5 plus 7 is 12. Why? Because the frequency of the much-maligned "rote" calculations we did ensured that we, in today's lingo, constructed this knowledge on our own.

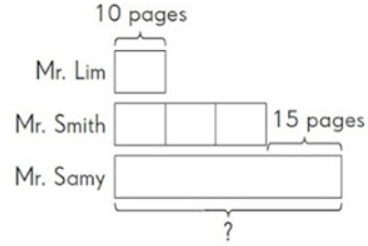

Back in my day, a typical third grade arithmetic sheet looked something like this:

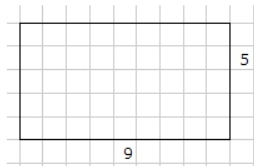

And a typical fourth grade arithmetic sheet looked something like this:

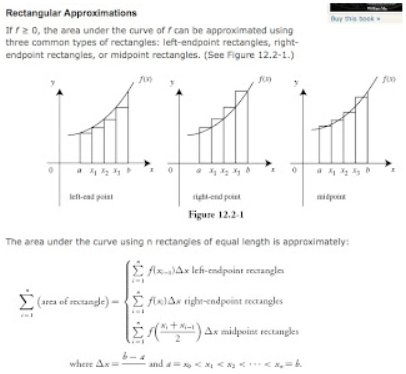

But in Reform Math programs like Everyday Math, such pages filled with calculations are only occasional, and each problem involves a much shorter series of calculations. Here's a set from 4th grade Everyday Math:

Each multi-digit addition problem amounts to a series of simple addition problems. For example, adding two two-digit numbers involves adding at least two pairs of numbers; three if regrouping is required. Adding three three-digit numbers can involve 8 iterations of simple addition. Some of the problems in the second traditional math sheet involve as many as 17 iterations of simple addition.
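To make the counting concrete, here is a minimal Python sketch that tallies the single-digit additions in a column-addition problem. The function name `count_simple_additions` and the counting convention are assumptions made for illustration: within a column, each digit after the first counts as one step, an incoming carry counts as one more, and adding a zero still counts.

```python
def count_simple_additions(addends):
    """Count simple additions when adding non-negative integers column by column.

    Assumed convention (illustrative only): in each column, every digit after
    the first is one step, and a nonzero incoming carry is one more step.
    """
    carry = 0
    steps = 0
    place = 1
    largest = max(addends)
    while place <= largest:
        # Digits of the addends that actually reach this column.
        digits = [(n // place) % 10 for n in addends if n >= place]
        terms = len(digits) + (1 if carry else 0)
        steps += max(terms - 1, 0)
        carry = (sum(digits) + carry) // 10
        place *= 10
    return steps


print(count_simple_additions([23, 45]))          # 2 (no regrouping)
print(count_simple_additions([27, 45]))          # 3 (one regrouping)
print(count_simple_additions([999, 999, 999]))   # 8
```

Under this convention the sketch reproduces the counts given above for two two-digit numbers and for three three-digit numbers.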

In the traditional 4th grade math scenario, we may have had 25 problems per day like those in the first two sheets above, 5 days a week. With Everyday Math, you might get, at best, 25 problems like those in the second two sheets above per week.

Putting it all together, the resulting difference in the amount of practice with basic addition "facts" is quite large: 5 (days) times 25 (problems) times 10 (say, as an average number of iterations of simple addition per problem) for the traditional math curriculum, versus 1 (day) times 25 (problems) times 3 (average iterations) for the Everyday Math curriculum. Assuming I'm not screwing up my arithmetic, that's 1,250 vs. 75 basic addition calculations per week. No wonder so many of those who are educated exclusively through Everyday Math don't know their "addition facts" by grade 5!
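For anyone who wants to check the arithmetic or plug in different guesses, the estimate can be sketched in a few lines of Python. The function name `weekly_simple_additions` is hypothetical, and the inputs are the rough figures from the paragraph above, not measured data.

```python
def weekly_simple_additions(days_per_week, problems_per_day, avg_steps_per_problem):
    """Rough weekly count of simple additions: days x problems x average steps."""
    return days_per_week * problems_per_day * avg_steps_per_problem


# Rough estimates taken from the text above (assumptions, not measurements).
traditional = weekly_simple_additions(5, 25, 10)
everyday_math = weekly_simple_additions(1, 25, 3)

print(traditional)    # 1250
print(everyday_math)  # 75
print(round(traditional / everyday_math, 1))  # 16.7
```

On these assumptions, the traditional curriculum provides roughly 17 times as much weekly practice with simple addition.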

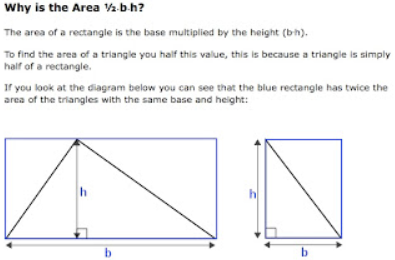

Ah, but surely their "conceptual understanding" is deeper. Note the calls for "ballpark estimate" at the bottom of each Everyday Math problem, where traditional math simply has you calculate. Stay tuned: in my next post on this topic, I'll discuss the state of conceptual understanding in my Everyday Math mal-educated 5th graders.